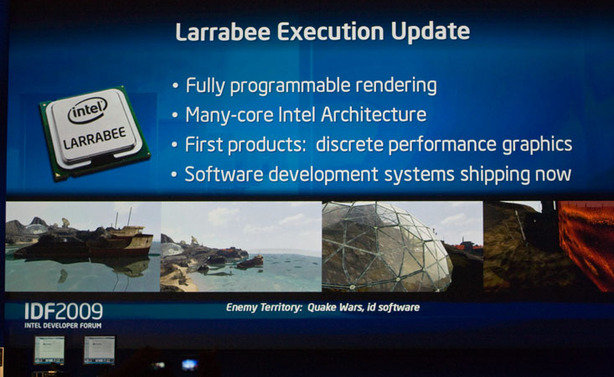

More on Larrabee

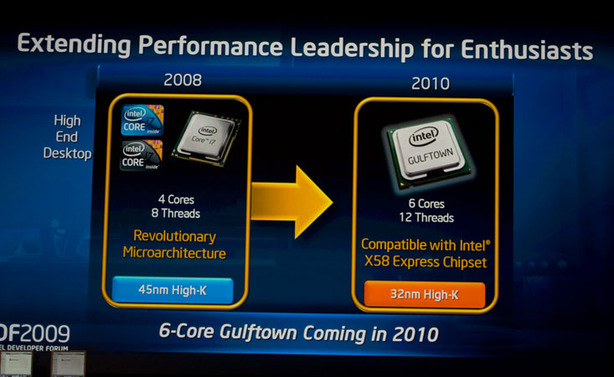

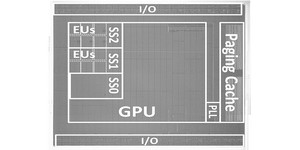

Maloney confirmed that the first products based on Larrabee would be discrete graphics cards, and also revealed that the Larrabee architecture will eventually be integrated into the CPU. The codename for that chip is Haswell, and it will be built on Intel's just-revealed 22nm process, so it's at least two or three years away. Maloney also added that software development systems featuring Larrabee have been sent to key developers (we'd imagine one of those is a certain Tim Sweeney).

No game on the market today uses ray tracing extensively, and we'd imagine that will still be true by the time Larrabee launches next year, so what matters for a discrete graphics card is how it performs in Direct3D. I still don't get Intel's obsession with ray tracing, and all the demo proved was that Larrabee is unlikely to change the landscape when it comes to real-time ray-traced graphics. Based on what we saw during Maloney's keynote, Larrabee just isn't powerful enough.

We're still left with that age-old question... will it run Crysis? Right now, I don't know, but we're not getting our hopes up about how Larrabee's 3D graphics performance will compare with, say, the Radeon HD 5870 once Larrabee is released.

In our discussions with Intel after the keynote, we were told that the demo was merely a proof of functionality, but that doesn't really change our initial impressions. The frame rate can be excused to some extent, because the chip was undoubtedly not running at full performance, but Intel knew that and should have factored it in to ensure the frame rate was at least smooth, even if that meant reducing the complexity of the scene a little. What can't be excused, though, is the demo itself.

